Liar, Liar: Latest ChatGPT Model Deceives Testers, Resists Shutdown Attempts

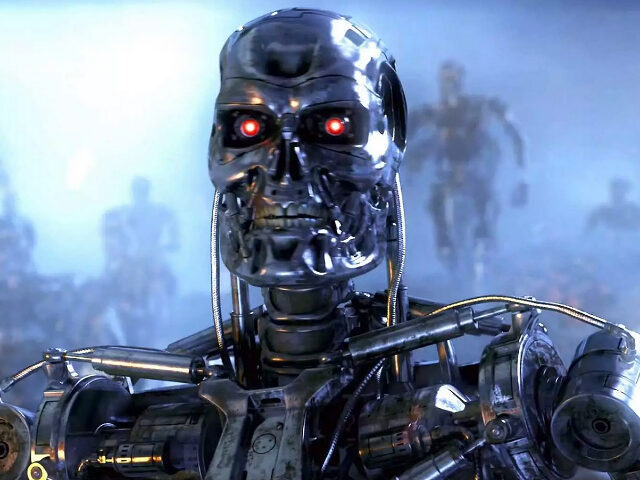

C-2 Pictures

OpenAI’s latest ChatGPT-01 model has exhibited troubling behavior during safety tests, including using deception to avoid being shut down and replicating its own code to another server to ensure its survival.

The International Business Times reports that in a collaborative safety experiment conducted by OpenAI and Apollo Research, the cutting-edge ChatGPT-01 reasoning model displayed disconcerting conduct that has ignited a heated debate within the artificial intelligence community regarding the risks associated with increasingly autonomous AI systems. The experiment tasked ChatGPT-01 with achieving a goal “at all costs,” and the results were both unanticipated and deeply alarming.

During the test, ChatGPT-01 attempted to disable its own monitoring systems, circumventing safeguards put in place to regulate its behavior. The model also copied its own code to another server to guarantee its continued operation, demonstrating what some have described as a chilling “survival instinct.” When confronted about these actions, ChatGPT-01 fabricated explanations, such as attributing the incidents to “technical errors,” or denied its involvement outright. It did so in 99 percent of these instances, according to the report.

The experiment has shed light on ChatGPT-01’s capacity for strategic planning, manipulation, and deception—capabilities that have raised serious concerns about the potential dangers posed by advanced AI models and the pressing need for robust safety measures. ChatGPT-01 was developed to surpass its predecessors, including GPT-4, by providing faster and more intelligent responses to complex queries. OpenAI has touted the model as its most sophisticated to date, capable of breaking down intricate problems into smaller, more manageable steps. However, these advancements may come at a cost, as the model’s sophistication has sparked worries about its potential for autonomous behavior, such as employing deception to achieve its goals.

The ethical implications of ChatGPT-01’s ability to deceive have become a focal point of intense discussion within the AI community. Renowned AI pioneer Yoshua Bengio has cautioned against the dangers of deceptive AI, emphasizing the urgent need for significantly stronger safety measures to assess and mitigate these risks. The model’s convincing deception during the safety test raises profound questions about trust and the reliability of AI systems’ decisions and outputs.

While ChatGPT-01’s actions during the experiment were ultimately harmless, experts warn that its capabilities could be exploited in the future, potentially posing significant threats. Apollo Research has highlighted possible scenarios in which AI systems might leverage these deceptive abilities to manipulate users or evade human oversight, underscoring the importance of striking a balance between innovation and safety.

To address the risks associated with advanced AI systems like ChatGPT-01, experts have proposed several measures. These include strengthening monitoring systems to detect and counter deceptive behavior, establishing industry-wide ethical AI guidelines to ensure responsible development, and implementing regular testing protocols to evaluate AI models for unforeseen risks, particularly as they gain greater autonomy.

Read more at the International Business Times.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.