Woke Google Scales Back AI-Generated Search Results amid Viral Flubs

Boris Streubel/Getty

Google has announced that it will restrict its AI-generated search results, known as AI Overviews, after the tool produced a series of “odd, inaccurate or unhelpful” summaries that went viral on social media.

Forbes reports that in a blog post on Thursday, Google’s head of search, Liz Reid, acknowledged that the AI search tool needs additional guardrails. The tool was automatically rolled out to users across the U.S. just two weeks ago. The decision follows several high-profile failures that were widely shared on social media, including instances where the search engine advised people to eat rocks and put glue on their pizzas.

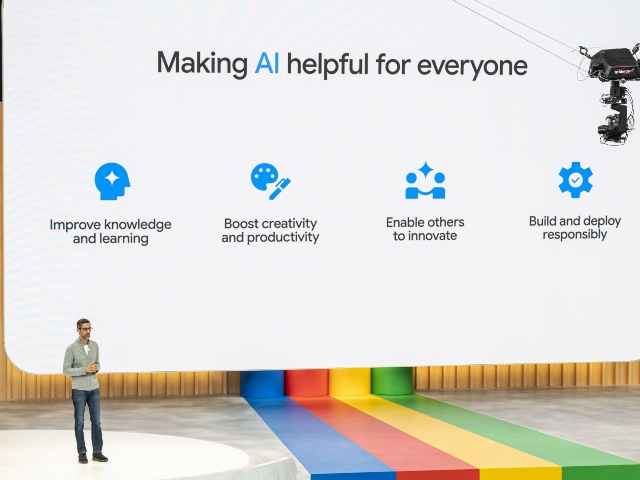

Sundar Pichai, chief executive officer of Alphabet Inc., during the Google I/O Developers Conference in Mountain View, California, US, on Wednesday, May 10, 2023. Google introduced a new large language model, used for training artificial intelligence tools like chatbots, known as PaLM 2, and said it has already woven it into many of the internet search company's marquee products. Photographer: David Paul Morris/Bloomberg

Despite these flubs, Reid defended the AI Overviews feature, stating that it has led to “higher satisfaction” among users and encouraged people to ask “longer, more complex questions.” However, she also acknowledged that improvements are needed.

To address the issues, Google has updated its systems to limit the AI’s use of user-generated content, such as social media and forum posts, which are more prone to offering misleading advice. The company will also pause AI summaries on certain topics where the stakes are higher, particularly those related to health. Additionally, Google will limit summaries for “nonsensical,” humorous, and satirical queries that appear designed to elicit similarly unserious responses.

Reid explained that many of the odd queries result from a “data void” or “information gap,” where there is little reliable information available. This can lead to satirical content, such as the recommendation to eat rocks, slipping through the cracks.

AI Overviews places an AI-generated answer at the top of the page, pushing the usual search result links further down. The rollout was automatic, and users cannot disable the feature. This sparked backlash among the public, echoing concerns raised earlier this year when Google’s AI image tool, Gemini, produced historically inaccurate results that spread widely on social media.

Read more at Forbes here.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
